Picture your organization processing 850,000 events per second with sub-10-millisecond latency, as telecommunications giants do. You may never need to handle over a billion API requests a day to power a global streaming service, as Netflix does. Still, these staggering numbers best demonstrate how event-driven architecture (EDA) transforms rigid legacy systems into responsive, scalable platforms that adapt to modern business demands. By replacing synchronous request-response patterns with asynchronous event streams, organizations report a 78% reduction in system bottlenecks.

EDA foundations for legacy system transformation

Breaking free from traditional architectural constraints requires understanding how event-driven architecture changes the way systems communicate and process information. Unlike conventional approaches where components wait for responses before proceeding, EDA enables parallel processing through asynchronous communication patterns. This architectural evolution becomes particularly powerful when transforming monolithic systems that struggle with modern scalability demands.

Defining event-driven architecture and its core components

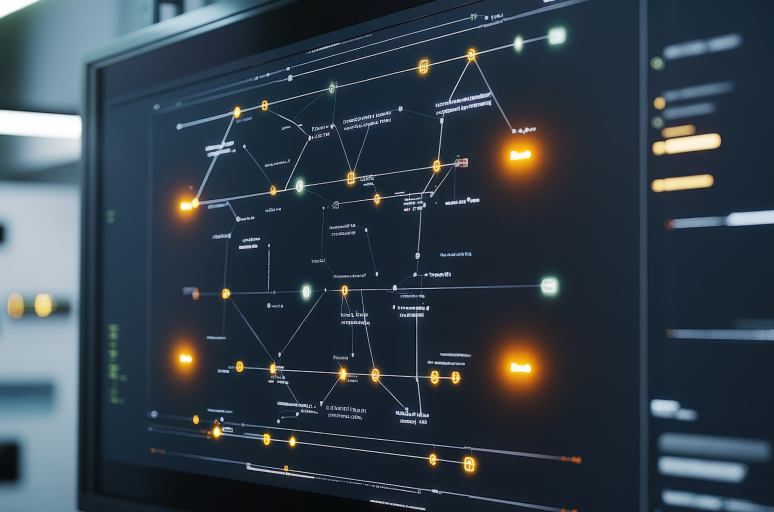

At its core, event-driven architecture relies on four interconnected layers designed to sense, transport, interpret, and act on business moments as they happen in a distributed environment.

The process begins with event producers, the system's sensory organs. They capture significant activities, from an e-commerce checkout to a real-time financial transaction, and encapsulate them as discrete, standardized event messages. Producers can be as varied as a user interface generating click events, a mobile application logging user engagement, an IoT device streaming temperature data at millisecond intervals, or an industrial sensor reporting pressure fluctuations.
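To make "discrete, standardized event messages" concrete, here is a minimal sketch of an event envelope in Python. The field names (`type`, `source`, `id`, `occurred_at`) are assumptions loosely inspired by the CloudEvents attribute set, and `checkout_completed` is a hypothetical producer function; real systems usually pin such an envelope down in a shared schema.

```python
import json
import uuid
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from typing import Any


@dataclass(frozen=True)
class Event:
    """A discrete, self-describing event message (hypothetical envelope)."""
    type: str                  # what happened, e.g. "checkout.completed"
    source: str                # which producer emitted it
    payload: dict[str, Any]    # business data describing the moment
    id: str = field(default_factory=lambda: str(uuid.uuid4()))
    occurred_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )

    def to_json(self) -> str:
        # Serialize to a wire format that any consumer can parse.
        return json.dumps(asdict(self), sort_keys=True)


def checkout_completed(order_id: str, total: float) -> Event:
    # A producer turns a business activity into a standardized event.
    return Event(
        type="checkout.completed",
        source="web-storefront",
        payload={"order_id": order_id, "total": total},
    )


event = checkout_completed("A-1001", 59.90)
print(event.to_json())
```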

This stream of events flows through the event channel, a transport backbone that leverages technologies like message brokers or streaming logs to form a reliable data pipeline to all interested systems. Central to this flow are event brokers, which function as sophisticated switchboards. They ingest the massive influx of events, filter them based on content or topic, and route them to all subscribed parties.
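The switchboard role of a broker can be illustrated with a toy, in-process publish/subscribe router. `InMemoryBroker` is hypothetical and omits everything a real broker such as Apache Kafka or RabbitMQ provides (persistence, partitioning, network transport); it only shows topic-based routing plus optional content-based filtering.

```python
from collections import defaultdict
from typing import Any, Callable

Event = dict[str, Any]
Handler = Callable[[Event], None]
Predicate = Callable[[Event], bool]


class InMemoryBroker:
    """Toy broker: routes each published event to every matching subscriber of its topic."""

    def __init__(self) -> None:
        self._subscribers: dict[str, list[tuple[Predicate, Handler]]] = defaultdict(list)

    def subscribe(self, topic: str, handler: Handler,
                  where: Predicate = lambda event: True) -> None:
        # 'where' adds optional content-based filtering on top of topic routing.
        self._subscribers[topic].append((where, handler))

    def publish(self, topic: str, event: Event) -> int:
        # Fan the event out to every subscriber whose filter matches; return delivery count.
        delivered = 0
        for where, handler in self._subscribers.get(topic, []):
            if where(event):
                handler(event)
                delivered += 1
        return delivered


broker = InMemoryBroker()
received: list[str] = []
broker.subscribe("payments", lambda e: received.append(f"ledger:{e['id']}"))
broker.subscribe("payments", lambda e: received.append(f"fraud:{e['id']}"),
                 where=lambda e: e["amount"] > 10_000)

broker.publish("payments", {"id": "p1", "amount": 50})
broker.publish("payments", {"id": "p2", "amount": 25_000})
print(received)  # ['ledger:p1', 'ledger:p2', 'fraud:p2']
```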

The event processing engine then consumes these events and applies predefined business logic – for instance, when a "payment successful" event is detected, it can trigger a chain reaction: dispatching a shipping order, sending a confirmation email, and updating the customer's loyalty points balance.
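The "payment successful" chain reaction maps naturally onto a rule table that associates an event type with the actions it triggers. The sketch below assumes hypothetical action functions that return a description instead of calling real shipping, email, or loyalty services, and the one-point-per-currency-unit loyalty rule is invented for illustration.

```python
from typing import Any, Callable

Action = Callable[[dict[str, Any]], str]


def dispatch_shipping(e: dict[str, Any]) -> str:
    return f"shipping order created for {e['order_id']}"


def send_confirmation(e: dict[str, Any]) -> str:
    return f"confirmation email sent to {e['email']}"


def add_loyalty_points(e: dict[str, Any]) -> str:
    points = int(e["amount"])  # hypothetical rule: 1 point per whole currency unit
    return f"{points} loyalty points added for {e['customer_id']}"


# Predefined business logic: which actions each event type triggers.
RULES: dict[str, list[Action]] = {
    "payment.succeeded": [dispatch_shipping, send_confirmation, add_loyalty_points],
}


def process(event_type: str, event: dict[str, Any]) -> list[str]:
    # Run every action registered for this event type; unknown types are ignored.
    return [action(event) for action in RULES.get(event_type, [])]


results = process("payment.succeeded", {
    "order_id": "A-1001", "email": "ana@example.com",
    "customer_id": "C-42", "amount": 59.90,
})
for line in results:
    print(line)
```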

Finally, event consumers are the downstream services that receive these events and perform the final work, such as writing data to a data warehouse, updating a user's real-time dashboard, or invoking an external API.

How EDA differs from synchronous request-response models

Event-driven design stands in stark contrast to legacy request-response systems, where services are tightly coupled. In that model, clients wait for server responses before proceeding, creating bottlenecks that cascade through entire systems during peak loads. Event-driven architecture removes these constraints through asynchronous communication: producers publish events without waiting for consumers to process them, so they keep operating regardless of consumer availability.

This decoupling lets services scale independently. During Black Friday sales, for example, retailers can spin up additional order-processing consumer instances to absorb demand spikes of up to 300% above baseline without modifying the core commerce platform. Traditional systems would require expensive vertical scaling or complex load-balancing configurations to achieve similar results. This flexibility lets organizations scale specific services according to actual demand patterns rather than provisioning every component for worst-case scenarios.
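The sketch below uses Python's `asyncio.Queue` as a stand-in for a broker to show both properties described above: the producer enqueues order events and moves on without waiting for them to be handled, and capacity is scaled by changing only `n_workers`, the number of competing consumers. The 10 ms sleep is an assumed processing cost, not a measured one.

```python
import asyncio


async def producer(queue: asyncio.Queue, n_orders: int) -> None:
    # Publish order events without waiting for any consumer to handle them.
    for i in range(n_orders):
        queue.put_nowait({"order_id": i})


async def consumer(name: str, queue: asyncio.Queue,
                   done: list[tuple[str, int]]) -> None:
    # Workers compete for events; adding workers adds capacity.
    while True:
        event = await queue.get()
        await asyncio.sleep(0.01)          # simulated processing time
        done.append((name, event["order_id"]))
        queue.task_done()


async def run(n_orders: int, n_workers: int) -> list[tuple[str, int]]:
    queue: asyncio.Queue = asyncio.Queue()
    done: list[tuple[str, int]] = []
    workers = [asyncio.create_task(consumer(f"worker-{w}", queue, done))
               for w in range(n_workers)]
    await producer(queue, n_orders)        # returns as soon as events are enqueued
    await queue.join()                     # wait until every event is processed
    for w in workers:
        w.cancel()
    await asyncio.gather(*workers, return_exceptions=True)
    return done


processed = asyncio.run(run(n_orders=20, n_workers=4))
print(len(processed), "orders processed")
```

Scaling for a demand spike here means raising `n_workers`; the producer code does not change.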

Key benefits of event-driven design for enterprise modernization

Decoupling system components enables an evolutionary modernization strategy in which legacy systems act as event producers. New microservices can then be built as event consumers that introduce modern features without direct coupling to the legacy code. This approach avoids the risks of "big bang" migrations, which frequently founder on their operational complexity.
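One lightweight way to make a legacy function emit events without rewriting its logic is to wrap it, as in this sketch. The `emits` decorator, the `publish` stand-in, and the geocoding consumer are all hypothetical. In production, teams more often capture changes at the database level (change data capture) or use a transactional outbox, because publishing after the legacy call, as done here, is not atomic with the legacy write.

```python
import functools
from typing import Any, Callable

published: list[dict[str, Any]] = []   # stand-in for a broker topic


def publish(event: dict[str, Any]) -> None:
    published.append(event)            # hypothetical: replace with a real broker client


def emits(event_type: str) -> Callable:
    """Wrap an unchanged legacy function so it also emits an event on success."""
    def decorator(legacy_fn: Callable) -> Callable:
        @functools.wraps(legacy_fn)
        def wrapper(*args: Any, **kwargs: Any) -> Any:
            result = legacy_fn(*args, **kwargs)   # legacy behavior runs first, as before
            publish({"type": event_type, "data": result})
            return result
        return wrapper
    return decorator


@emits("customer.address_changed")
def update_customer_address(customer_id: str, address: str) -> dict[str, str]:
    # Placeholder for existing monolith logic (e.g. a database update).
    return {"customer_id": customer_id, "address": address}


def new_geocoding_service(events: list[dict[str, Any]]) -> list[str]:
    # A new microservice consumes the stream; the legacy logic is untouched.
    return [e["data"]["customer_id"] for e in events
            if e["type"] == "customer.address_changed"]


update_customer_address("C-42", "1 Main St")
print(new_geocoding_service(published))  # ['C-42']
```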

Fault tolerance also improves significantly: if a consuming service becomes unavailable, the event broker persists its messages in a queue until the consumer recovers. Automated retries for transient failures and dead-letter queues that isolate non-processable messages further protect data integrity.
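Retries and dead-letter queues can be sketched as a consumer loop that distinguishes transient failures from messages it can never process. `TransientError`, `max_attempts=3`, and the shape of dead-letter entries are assumptions; brokers such as RabbitMQ or Amazon SQS offer dead-lettering natively, and real retry loops add backoff between attempts.

```python
from typing import Any, Callable


class TransientError(Exception):
    """A failure worth retrying, such as a timeout."""


def consume(events: list[dict[str, Any]],
            handler: Callable[[dict[str, Any]], None],
            max_attempts: int = 3) -> list[dict[str, Any]]:
    """Process events with retries; return the ones routed to the dead-letter queue."""
    dead_letters: list[dict[str, Any]] = []
    for event in events:
        for attempt in range(1, max_attempts + 1):
            try:
                handler(event)
                break                                    # success: move to next event
            except TransientError:
                if attempt == max_attempts:              # retries exhausted
                    dead_letters.append({**event, "error": "retries exhausted"})
                # otherwise loop and retry (real systems add backoff here)
            except Exception as exc:                     # non-processable message
                dead_letters.append({**event, "error": repr(exc)})
                break
    return dead_letters


calls = {"e2": 0}


def flaky_handler(event: dict[str, Any]) -> None:
    if event["id"] == "e2":
        calls["e2"] += 1
        if calls["e2"] < 2:
            raise TransientError("timeout")      # succeeds on the second attempt
    if event["id"] == "e3":
        raise ValueError("malformed payload")    # poison message


dlq = consume([{"id": "e1"}, {"id": "e2"}, {"id": "e3"}], flaky_handler)
print([e["id"] for e in dlq])  # ['e3']
```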

Beyond the technical improvements, this architectural pattern delivers quantifiable business value: adopting organizations report productivity gains of 23%.

The enhanced agility manifests in reduced coordination overhead between development teams. New features can subscribe to existing event streams without requiring changes to event producers. This independence accelerates time-to-market for new capabilities while reducing the risk of introducing bugs into stable systems.

Legacy system challenges that event-driven platforms solve

Monolithic applications suffer from performance limitations where all components must scale together regardless of individual resource requirements, forcing organizations to overprovision infrastructure for components that rarely experience high load. The U.S. federal government alone spends $337 million annually on just ten critical mainframe systems, illustrating the financial burden of maintaining outdated architectures.

Development teams face increasingly complex maintenance cycles where simple feature additions require weeks of testing across interconnected modules. Deployment constraints mandate simultaneous updates across all system components, forcing organizations into rigid release schedules that cannot respond quickly to market opportunities.

Tight coupling between system components

Cascading failures represent a critical risk: a payment processing error can simultaneously crash inventory management, customer service, and reporting functions. When one component experiences issues, the entire system can become unstable, which is particularly damaging during peak business periods when reliability matters most. Feature delivery also slows because tightly coupled components demand extensive coordination, so organizations wait months for capabilities that loosely coupled architectures deliver in days.

Batch processing limitations in real-time business environments

Data freshness issues plague organizations relying on nightly batch updates for critical business information, creating decision-making gaps where managers operate with outdated data. Customers expecting immediate order confirmations or account balance updates encounter frustrating delays when systems process transactions in periodic batches. Competitive disadvantages emerge as real-time competitors adjust pricing and inventory instantly while batch-dependent organizations react to yesterday's market conditions, losing sales opportunities during rapidly changing demand periods.

Integration complexity with modern applications

Security vulnerabilities multiply as legacy systems integrate through outdated communication methods lacking modern encryption or access controls, expanding attack surfaces that threaten organizational data. API proliferation creates maintenance nightmares where legacy systems require dozens of custom integration points, each demanding individual security updates and version management. Data synchronization problems arise when multiple systems maintain separate copies without reliable consistency mechanisms, leading to customer service issues and compliance risks.

Event-driven architecture examples across industries

Real-world implementations demonstrate how event-driven architecture delivers measurable business value across diverse sectors. These transformations showcase practical approaches to modernizing legacy systems while maintaining operational continuity. Organizations achieve significant improvements in efficiency, customer satisfaction, and competitive positioning through strategic EDA adoption.

Financial services transformation with real-time transaction processing

Major banks process an average of 500,000 transactions per second. One of the most impressive examples of successful transformation is Citi, which scaled its commercial cards API platform using event-driven microservices, processing millions of requests per month while maintaining operational excellence. The implementation separated complex workflows into manageable events, enabling independent scaling during demand spikes. Fraud detection systems can now identify suspicious patterns 75% faster. Regulatory compliance benefits from comprehensive audit trails in which every transaction generates an immutable event log entry, automatically triggering alerts when values exceed regulatory thresholds.
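An immutable audit trail with threshold alerts can be approximated by an append-only log in which each entry includes the hash of the previous one, so any later edit becomes detectable. Hash chaining is one common way to make a log tamper-evident, not necessarily what Citi uses, and `REPORTING_THRESHOLD` is a hypothetical value.

```python
import hashlib
import json
from typing import Any

REPORTING_THRESHOLD = 10_000  # hypothetical limit; real limits depend on the regulator
GENESIS = "0" * 64            # placeholder "previous hash" for the first entry


def _entry_hash(txn: dict[str, Any], prev_hash: str) -> str:
    body = json.dumps({"txn": txn, "prev": prev_hash}, sort_keys=True)
    return hashlib.sha256(body.encode()).hexdigest()


class AuditLog:
    """Append-only transaction log; each entry hashes the previous one."""

    def __init__(self) -> None:
        self._entries: list[dict[str, Any]] = []
        self.alerts: list[str] = []

    def append(self, txn: dict[str, Any]) -> str:
        prev_hash = self._entries[-1]["hash"] if self._entries else GENESIS
        entry_hash = _entry_hash(txn, prev_hash)
        self._entries.append({"txn": dict(txn), "prev": prev_hash, "hash": entry_hash})
        if txn["amount"] > REPORTING_THRESHOLD:
            self.alerts.append(f"txn {txn['id']} exceeds threshold")
        return entry_hash

    def verify(self) -> bool:
        # Recompute the chain; editing any entry breaks its hash and every later link.
        prev = GENESIS
        for entry in self._entries:
            if entry["prev"] != prev or _entry_hash(entry["txn"], prev) != entry["hash"]:
                return False
            prev = entry["hash"]
        return True


log = AuditLog()
log.append({"id": "t1", "amount": 250})
log.append({"id": "t2", "amount": 15_000})
print(log.alerts)     # ['txn t2 exceeds threshold']
print(log.verify())   # True
log._entries[0]["txn"]["amount"] = 1   # simulate tampering with a stored entry
print(log.verify())   # False
```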

E-commerce platform evolution using event-driven microservices

Target implemented event-driven systems across its stores for real-time inventory management, enabling immediate stock checks and personalized customer assistance through handheld devices. Accelerated order processing cuts fulfillment times from hours to minutes as events trigger parallel processing across payment verification, inventory allocation, and shipping coordination. Customer journey tracking captures browsing, purchase, and service interactions to build comprehensive profiles that support personalized recommendations and increase lifetime value.
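The parallel fan-out of an order event can be sketched with `asyncio.gather`, which runs the three handlers concurrently so total latency tracks the slowest step rather than the sum. The handlers are hypothetical stubs with an assumed 0.2-second delay each, and the sketch assumes the three steps are independent; flows where shipping must wait for payment need explicit sequencing or a saga.

```python
import asyncio
import time


async def verify_payment(order_id: str) -> str:
    await asyncio.sleep(0.2)                 # simulated call to a payment service
    return f"payment verified for {order_id}"


async def allocate_inventory(order_id: str) -> str:
    await asyncio.sleep(0.2)                 # simulated warehouse reservation
    return f"inventory allocated for {order_id}"


async def coordinate_shipping(order_id: str) -> str:
    await asyncio.sleep(0.2)                 # simulated carrier booking
    return f"shipping booked for {order_id}"


async def on_order_placed(event: dict[str, str]) -> list[str]:
    # One "order placed" event fans out to three independent handlers at once.
    oid = event["order_id"]
    return await asyncio.gather(
        verify_payment(oid), allocate_inventory(oid), coordinate_shipping(oid)
    )


start = time.perf_counter()
results = asyncio.run(on_order_placed({"order_id": "A-1001"}))
elapsed = time.perf_counter() - start
print(results)
print(f"{elapsed:.1f}s")   # roughly 0.2s rather than 0.6s, because the steps overlap
```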

Healthcare patient record synchronization

Healthcare organizations process up to 1 million documents daily through cloud-native platforms integrating legacy applications with modern microservices for just-in-time delivery. Medical device connectivity enables continuous monitoring through IoT integration, generating event streams from vital signs and diagnostic equipment that trigger immediate alerts when conditions change. Appointment scheduling coordinates across multiple systems through events that update electronic health records, billing systems, and provider calendars automatically, reducing conflicts while improving resource utilization.

Manufacturing IoT sensor data processing

Manufacturers implement digital factory initiatives that link production orders with factory systems, CRM, ERP, and supply chain platforms through event-driven architecture. Smart vibration sensors monitor equipment continuously, automatically triggering maintenance requests when readings exceed ISO 10816 thresholds. These predictive maintenance systems analyze event streams to identify potential failures before they occur, preventing costly downtime and quality issues that hurt customer satisfaction.
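Turning sensor readings into maintenance events is essentially a threshold classifier placed on the stream. In this sketch, `ZONE_LIMITS` are placeholder zone boundaries in mm/s RMS velocity that only mimic the structure of ISO 10816 severity zones (A through D); the actual limits depend on machine class and must be taken from the standard itself.

```python
from dataclasses import dataclass
from typing import Optional

# Placeholder upper limits per zone (mm/s RMS). Assumptions, not values for any
# particular machine class: look up the real table in ISO 10816 before use.
ZONE_LIMITS = [(0.71, "A"), (1.8, "B"), (4.5, "C")]   # above the last limit -> "D"
MAINTENANCE_ZONES = {"C", "D"}


@dataclass(frozen=True)
class VibrationReading:
    machine_id: str
    velocity_mm_s: float   # RMS vibration velocity reported by the sensor


def zone_for(velocity_mm_s: float) -> str:
    # Walk the boundaries from lowest to highest; anything above the last is zone D.
    for upper_limit, zone in ZONE_LIMITS:
        if velocity_mm_s <= upper_limit:
            return zone
    return "D"


def on_reading(reading: VibrationReading) -> Optional[dict]:
    """Turn a sensor event into a maintenance-request event when needed."""
    zone = zone_for(reading.velocity_mm_s)
    if zone in MAINTENANCE_ZONES:
        return {"type": "maintenance.requested",
                "machine_id": reading.machine_id, "zone": zone}
    return None   # normal readings produce no downstream event


for r in [VibrationReading("press-1", 0.5), VibrationReading("press-2", 2.3),
          VibrationReading("press-3", 7.0)]:
    print(r.machine_id, zone_for(r.velocity_mm_s), on_reading(r))
```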

Telecommunications network event processing

Modern networks maintain 99.999% availability with sub-10-millisecond processing latency. With an event-driven architecture, billing systems handle complex rating and charging events in real time, supporting dynamic pricing models and spending controls that enhance the customer experience while preventing revenue leakage.
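Real-time rating with spending controls means pricing each usage event as it arrives and checking it against the subscriber's cap before accepting it. `RATE_PER_MB`, the cap, and the event names are hypothetical; `Decimal` is used so that monetary sums do not accumulate floating-point error.

```python
from dataclasses import dataclass, field
from decimal import Decimal

RATE_PER_MB = Decimal("0.002")   # hypothetical tariff per megabyte


@dataclass
class Account:
    subscriber_id: str
    spending_cap: Decimal
    spent: Decimal = Decimal("0")
    events: list[str] = field(default_factory=list)


def rate_usage(account: Account, megabytes: int) -> str:
    """Price one usage event on arrival and enforce the spending cap."""
    charge = RATE_PER_MB * megabytes
    if account.spent + charge > account.spending_cap:
        account.events.append("usage.blocked")    # deny instead of billing past the cap
        return "blocked"
    account.spent += charge
    account.events.append("usage.charged")
    return "charged"


acct = Account("sub-7", spending_cap=Decimal("1.00"))
print([rate_usage(acct, mb) for mb in (300, 150, 100)])  # ['charged', 'charged', 'blocked']
print(acct.spent)                                         # 0.900
```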

Retail customer journey tracking

Recommendation systems process customer behavior events during shopping sessions – product views, cart additions, and purchases immediately update algorithms to provide relevant suggestions. Loyalty programs benefit from instant event processing where purchase events and engagement activities automatically update balances and trigger personalized rewards. When customers contact support, recent events from purchases and website visits automatically populate representative dashboards, enabling effective issue resolution.
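Populating a representative's dashboard from recent events can be as simple as a consumer that keeps a bounded, per-customer history. The sketch uses `collections.deque` with `maxlen` so old events drop off automatically; `CustomerTimeline`, `MAX_RECENT`, and the event fields are hypothetical.

```python
from collections import defaultdict, deque
from typing import Any

MAX_RECENT = 5   # hypothetical: how many recent events a representative sees


class CustomerTimeline:
    """Keeps the latest events per customer so support dashboards load instantly."""

    def __init__(self, max_recent: int = MAX_RECENT) -> None:
        self._recent: dict[str, deque] = defaultdict(lambda: deque(maxlen=max_recent))

    def on_event(self, event: dict[str, Any]) -> None:
        # Called for every purchase, page-view, or cart event on the stream.
        self._recent[event["customer_id"]].append(event)

    def dashboard(self, customer_id: str) -> list[str]:
        # Newest first, the order a representative would want to read them in.
        return [f"{e['type']}: {e['detail']}"
                for e in reversed(self._recent.get(customer_id, deque()))]


timeline = CustomerTimeline(max_recent=2)
timeline.on_event({"customer_id": "C-42", "type": "page_view", "detail": "/returns"})
timeline.on_event({"customer_id": "C-42", "type": "purchase", "detail": "order A-1001"})
timeline.on_event({"customer_id": "C-42", "type": "cart_add", "detail": "SKU-7"})
print(timeline.dashboard("C-42"))
# ['cart_add: SKU-7', 'purchase: order A-1001']
```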

Implementation roadmap for event-driven architecture migration

Successfully implementing event-driven architecture depends on a structured methodology designed to proactively reduce risk, systematically build team competencies, and deliver demonstrable business outcomes. Organizations must weigh technical complexity against commercial objectives to chart an achievable and effective adoption path.

Assessment phase for legacy system evaluation

Technical debt analysis evaluates architectures, integration patterns, and performance constraints to reveal which systems consume disproportionate resources. Dependency mapping uncovers the complex relationships among the 8 to 12 distinct financial platforms that a typical enterprise manages simultaneously. Modernization priority ranking then sequences efforts for maximum impact, focusing on systems with high maintenance costs or frequent performance issues.
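Dependency mapping and priority ranking can be combined into a simple ordering heuristic: extract the least-coupled systems first and, among equally coupled ones, start with the most expensive to maintain. The dependency map, the cost figures, and the scoring rule itself are hypothetical illustrations rather than a standard method.

```python
from collections import Counter

# Hypothetical dependency map: system -> systems it calls directly.
DEPENDS_ON: dict[str, set[str]] = {
    "notifications": {"customer_db"},
    "reporting": {"ledger", "customer_db"},
    "payments": {"ledger", "customer_db", "fraud_rules"},
    "ledger": {"customer_db"},
    "fraud_rules": set(),
    "customer_db": set(),
}
# Hypothetical annual maintenance cost per system, in thousands.
MAINTENANCE_COST = {"notifications": 120, "reporting": 300, "payments": 900,
                    "ledger": 700, "fraud_rules": 80, "customer_db": 500}


def extraction_order(depends_on: dict[str, set[str]], cost: dict[str, int]) -> list[str]:
    """Least-coupled systems first; among equals, the costliest to maintain first."""
    dependents = Counter(dep for deps in depends_on.values() for dep in deps)
    coupling = {s: len(deps) + dependents[s] for s, deps in depends_on.items()}
    return sorted(depends_on, key=lambda s: (coupling[s], -cost[s]))


print(extraction_order(DEPENDS_ON, MAINTENANCE_COST))
# ['notifications', 'fraud_rules', 'reporting', 'payments', 'ledger', 'customer_db']
```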

Proof of concept development with pilot event-driven applications

Technology stack selection evaluates platforms such as Apache Kafka for high-throughput streaming or AWS EventBridge for cloud-native integration, based on specific requirements. Minimum viable products demonstrate clear value through simple implementations, like notification systems or audit logging, that provide immediate benefits without disrupting operations. Stakeholder validation ensures implementations address real business needs and builds confidence through regular demonstrations and feedback sessions.

Phased migration strategy with risk management protocols

Critical migration phases include:

- Service extraction sequencing – start with peripheral services that have minimal dependencies before attempting core system modernization. For example, extracting customer notification services first lets teams gain experience without risking critical transaction processing.

- Rollback procedures – provide safety nets through predefined criteria and automated mechanisms that ensure rapid recovery. For instance, an automatic rollback triggers when error rates exceed 5% or response times double from baseline measurements.

- Performance monitoring – track both technical metrics, such as processing latency, and business metrics, such as transaction completion rates, throughout migration phases. For example, real-time dashboards display event throughput alongside customer satisfaction scores.

These phases enable incremental progress while maintaining operational stability. Organizations implementing structured approaches report smoother transitions with fewer production incidents.
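The rollback criteria above (an error rate above 5%, or response times doubling from baseline) translate directly into a guard that a deployment pipeline could evaluate on each monitoring window. Comparing p95 latency is an assumption, and `Window` and `should_roll_back` are hypothetical names.

```python
from dataclasses import dataclass

ERROR_RATE_LIMIT = 0.05     # roll back when more than 5% of requests fail
LATENCY_MULTIPLIER = 2.0    # ...or when latency reaches double the baseline


@dataclass(frozen=True)
class Window:
    requests: int
    errors: int
    p95_latency_ms: float   # assumption: p95 is the latency statistic compared


def should_roll_back(window: Window, baseline_latency_ms: float) -> tuple[bool, str]:
    """Evaluate one monitoring window against the predefined rollback criteria."""
    error_rate = window.errors / window.requests if window.requests else 0.0
    if error_rate > ERROR_RATE_LIMIT:
        return True, f"error rate {error_rate:.1%} exceeds {ERROR_RATE_LIMIT:.0%}"
    if window.p95_latency_ms >= LATENCY_MULTIPLIER * baseline_latency_ms:
        return True, (f"p95 latency {window.p95_latency_ms:.0f} ms is at least "
                      f"{LATENCY_MULTIPLIER:g}x the {baseline_latency_ms:.0f} ms baseline")
    return False, "healthy"


print(should_roll_back(Window(1000, 20, 180.0), baseline_latency_ms=120.0))
# (False, 'healthy')
print(should_roll_back(Window(1000, 80, 150.0), baseline_latency_ms=120.0))
# (True, 'error rate 8.0% exceeds 5%')
print(should_roll_back(Window(1000, 10, 260.0), baseline_latency_ms=120.0))
```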

Team preparation and skill development requirements

Training programs address technical skills through hands-on workshops with event streaming technologies and distributed system design principles. Hiring strategies target professionals with distributed systems experience while creating alternative career paths for technical experts preferring individual contributor roles. Knowledge transfer processes implement communities of practice and documentation standards capturing EDA expertise across teams.

Technology stack selection and infrastructure planning

Event broker evaluation assesses throughput requirements and integration capabilities across platforms; Gartner identifies three distinct broker classes for different use cases. Cloud platform choices balance managed-service convenience against control requirements and vendor lock-in concerns. Monitoring tool integration ensures comprehensive observability through metrics, logs, and distributed tracing from day one of implementation.

Event-driven software architecture best practices and common pitfalls

Avoiding common architectural mistakes while following proven patterns ensures reliable, maintainable event-driven systems. These guidelines help organizations maximize EDA benefits while minimizing operational complexity.

Event schema design and versioning strategies

Maintaining backward compatibility means prohibiting breaking changes: always add fields, never remove or rename existing ones, and version conflicts are avoided. PostNL successfully implemented this approach, using custom validation capabilities to ensure consumers continue operating normally. Evolution planning adds extensibility mechanisms, such as optional metadata fields, that accommodate future requirements without breaking integrations. Contract management relies on automated validation and compatibility testing, with explicit approval processes for any interface change.
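The add-but-never-remove rule can be checked mechanically. This sketch compares hypothetical schema versions, expressed as plain dictionaries, and flags removed or renamed fields, type changes, and new required fields; it also shows a tolerant reader that ignores unknown fields. Production systems typically delegate such checks to a schema registry (for example, the compatibility modes of Confluent Schema Registry) rather than hand-written code, and the `EUR` default is an assumption.

```python
from typing import Any

# Hypothetical schema format: field name -> (type name, required?).
Schema = dict[str, tuple[str, bool]]

V1: Schema = {"order_id": ("string", True), "total": ("number", True)}
V2: Schema = {**V1, "currency": ("string", False), "metadata": ("object", False)}
V3_BAD: Schema = {"order_id": ("string", True), "amount": ("number", True)}  # renamed


def breaking_changes(old: Schema, new: Schema) -> list[str]:
    """List changes that break compatibility between two schema versions."""
    problems = []
    for name, (typ, _) in old.items():
        if name not in new:
            problems.append(f"removed or renamed field '{name}'")
        elif new[name][0] != typ:
            problems.append(f"changed type of '{name}'")
    for name, (_, required) in new.items():
        if name not in old and required:
            problems.append(f"new required field '{name}'")
    return problems


def read_order(event: dict[str, Any]) -> tuple[str, float, str]:
    # Tolerant reader: use known fields, ignore unknown ones, default optional ones.
    return event["order_id"], event["total"], event.get("currency", "EUR")


print(breaking_changes(V1, V2))       # []
print(breaking_changes(V1, V3_BAD))   # ["removed or renamed field 'total'", "new required field 'amount'"]
```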

Monitoring and observability implementation for distributed systems

Key monitoring capabilities of event-driven integration include:

- distributed tracing – captures complete event journeys from production through consumption, enabling rapid bottleneck identification. For example, OpenTelemetry maintains trace context across service boundaries, revealing processing delays,

- metrics collection – tracks processing rates, queue depths, and error rates alongside business outcomes. For instance, correlating event throughput with revenue impact demonstrates EDA value,

- alerting configuration – balances sensitivity with practicality through severity levels and context-aware notifications. For example, intelligent alerting correlates related events to reduce noise from transient conditions.

These capabilities provide comprehensive system visibility essential for maintaining performance. Organizations implementing robust observability report significant reductions in troubleshooting time.
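Trace context propagation across an event hop can be illustrated with the W3C Trace Context `traceparent` header, whose format is `version-traceid-parentid-flags`. In practice, OpenTelemetry propagators inject and extract this header for you; the helper functions below and the `Counter` standing in for a metrics backend are hypothetical, chosen so the example runs with the standard library alone.

```python
import secrets
from collections import Counter
from typing import Any

metrics: Counter = Counter()   # stand-in for a real metrics backend


def new_traceparent() -> str:
    # W3C Trace Context: version 00, 32-hex trace id, 16-hex span id, flags 01 (sampled).
    return f"00-{secrets.token_hex(16)}-{secrets.token_hex(8)}-01"


def child_traceparent(parent: str) -> str:
    # Same trace id, fresh span id: the next hop joins the existing trace.
    version, trace_id, _parent_span, flags = parent.split("-")
    return f"{version}-{trace_id}-{secrets.token_hex(8)}-{flags}"


def trace_id_of(traceparent: str) -> str:
    return traceparent.split("-")[1]


def produce(payload: dict[str, Any]) -> dict[str, Any]:
    metrics["events_published"] += 1
    return {"headers": {"traceparent": new_traceparent()}, "payload": payload}


def consume(message: dict[str, Any]) -> dict[str, Any]:
    # Read the incoming context and attach a child context to whatever is emitted next.
    outgoing = child_traceparent(message["headers"]["traceparent"])
    metrics["events_consumed"] += 1
    return {"headers": {"traceparent": outgoing}, "payload": message["payload"]}


msg = produce({"order_id": "A-1001"})
forwarded = consume(msg)
print(trace_id_of(msg["headers"]["traceparent"])
      == trace_id_of(forwarded["headers"]["traceparent"]))  # True
print(dict(metrics))  # {'events_published': 1, 'events_consumed': 1}
```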

Avoiding over-engineering and maintaining system simplicity

Event granularity decisions balance flexibility against overhead: fine-grained events provide maximum decoupling but can generate excessive network traffic, while coarse-grained events reduce load but limit reusability. Defining service boundaries requires careful domain analysis to draw microservice boundaries that minimize cross-service dependencies. Complexity management favors simple, proven patterns over sophisticated architectures unless specific requirements justify the additional operational overhead.
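The granularity trade-off is easiest to see by emitting the same customer update both ways. In this hypothetical example, the fine-grained producer sends one event per changed attribute, while the coarse-grained producer sends a single snapshot; the event type names are assumptions.

```python
from typing import Any

Record = dict[str, str]


def fine_grained(customer_id: str, before: Record, after: Record) -> list[dict[str, Any]]:
    # One small event per changed attribute; consumers subscribe only to what they need.
    return [{"type": f"customer.{key}_changed", "customer_id": customer_id, "value": value}
            for key, value in after.items() if before.get(key) != value]


def coarse_grained(customer_id: str, before: Record, after: Record) -> list[dict[str, Any]]:
    # A single snapshot event: fewer messages, but every consumer receives the whole record.
    if before == after:
        return []
    return [{"type": "customer.updated", "customer_id": customer_id, "snapshot": dict(after)}]


before = {"email": "a@example.com", "phone": "555-0100", "address": "1 Main St"}
after = {"email": "b@example.com", "phone": "555-0199", "address": "1 Main St"}

print([e["type"] for e in fine_grained("C-42", before, after)])
# ['customer.email_changed', 'customer.phone_changed']
print([e["type"] for e in coarse_grained("C-42", before, after)])
# ['customer.updated']
```

Two messages versus one here; with many attributes and many updates, that ratio is what drives the network-traffic side of the trade-off.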

Partner with us for your event-driven architecture transformation

We invite you to partner with us for your event-driven architecture transformation. At RST we focus on custom EDA implementation programs designed to guide your organization through the complete lifecycle, from assessment to deployment, with a clear focus on delivering business value. Contact us today to see measurable advancements in your system performance, operational efficiency, and customer satisfaction, all aligned precisely with your organization’s unique requirements.